Four drums you play with your arms. Camera at the center of the board. No speakers.


- App code (Python + MoveNet): GitHub

What I tried first, and why I stopped

I wanted to read a dancer’s internal tempo and generate music to match it. I used MoveNet keypoints, filtered hip motion, and tried to find a stable BPM with peak picking and autocorrelation. It worked in a narrow lab case and fell apart with real people. Small motions, irregular timing, and pose noise beat the algorithm. I paused the tempo idea and moved to discrete gestures.
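For reference, the abandoned tempo estimate can be sketched like this. It is a minimal pure-Python version, not the project's code. It assumes a hip-height signal that is already filtered and sampled at a fixed `fps`. The helper name `estimate_bpm` and the 60 to 180 BPM search window are hypothetical. The function picks the lag with the strongest normalized autocorrelation and converts that lag to beats per minute.

```python
def estimate_bpm(signal, fps, bpm_min=60.0, bpm_max=180.0):
    """Estimate tempo from a 1-D motion signal via autocorrelation.

    Hypothetical helper: `signal` is, e.g., filtered hip height per frame.
    Returns None when the signal is too short or flat to say anything.
    """
    n = len(signal)
    if n < 2:
        return None
    mean = sum(signal) / n
    x = [s - mean for s in signal]          # remove the DC offset
    energy = sum(v * v for v in x)
    if energy == 0:
        return None
    # Lags (in frames) that correspond to the BPM search window.
    lag_min = max(1, int(fps * 60.0 / bpm_max))
    lag_max = min(n - 1, int(fps * 60.0 / bpm_min))
    best_lag, best_r = None, 0.0
    for lag in range(lag_min, lag_max + 1):
        # Normalized autocorrelation at this lag.
        r = sum(x[i] * x[i + lag] for i in range(n - lag)) / energy
        if r > best_r:
            best_lag, best_r = lag, r
    if best_lag is None:
        return None
    return 60.0 * fps / best_lag
```

On clean periodic motion the peak is sharp. With small or irregular movement and noisy keypoints, neighboring lags tend to score almost the same, so the estimate jumps between them. That is the failure mode described above.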

The working interaction

I kept it simple: four diagonals. Up-left, up-right, down-left, down-right. Each maps to one drum: bass, tambourine, wood block, cymbal.

The program builds a body frame from the shoulders. It checks if the wrist points toward the target diagonal with enough reach and a straight-ish elbow. If the arm holds that region for a short dwell, the drum fires once. If the arm returns toward the torso, the gesture re-arms. Repeats are deliberate, not accidental. You can retrigger the same gesture without doing a different one first.
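Here is a minimal sketch of the region test and the fire-once/re-arm logic. It makes these assumptions:

- Keypoints are in image pixels, with y pointing down.
- The arm's own shoulder is the origin, and shoulder width is the unit of length.
- Each diagonal is a 30° cone.

Every threshold, the names `classify` and `ArmTrigger`, and the region keys are hypothetical placeholders, not the tuned values from the repo. Because the camera faces the viewer, image left is the player's right unless the frame is mirrored.

```python
import math

# Hypothetical thresholds, not the project's tuned values.
REACH_MIN = 1.0        # wrist-to-shoulder distance, in shoulder widths
NEUTRAL_MAX = 0.6      # below this reach the arm counts as back at the torso
ELBOW_MIN_DEG = 140.0  # "straight-ish" elbow
CONE_DEG = 30.0        # allowed deviation from the ideal diagonal
DWELL_S = 0.08         # how long the arm must hold a region

# Diagonal directions in a y-up frame; which drum each plays is up to the build.
REGIONS = {
    "up_left": (-1.0, 1.0),
    "up_right": (1.0, 1.0),
    "down_left": (-1.0, -1.0),
    "down_right": (1.0, -1.0),
}

def _angle_deg(u, v):
    """Angle between two 2-D vectors in degrees (180 if either has zero length)."""
    nu, nv = math.hypot(*u), math.hypot(*v)
    if nu == 0 or nv == 0:
        return 180.0
    c = (u[0] * v[0] + u[1] * v[1]) / (nu * nv)
    return math.degrees(math.acos(max(-1.0, min(1.0, c))))

def classify(shoulder, elbow, wrist, shoulder_width):
    """Return (region or None, reach) for one arm. Points are (x, y) pixels, y down."""
    d = ((wrist[0] - shoulder[0]) / shoulder_width,
         (shoulder[1] - wrist[1]) / shoulder_width)   # flip y so up is positive
    reach = math.hypot(*d)
    upper = (elbow[0] - shoulder[0], elbow[1] - shoulder[1])
    fore = (wrist[0] - elbow[0], wrist[1] - elbow[1])
    elbow_deg = 180.0 - _angle_deg(upper, fore)        # 180 means fully straight
    if reach < REACH_MIN or elbow_deg < ELBOW_MIN_DEG:
        return None, reach
    for name, target in REGIONS.items():
        if _angle_deg(d, target) <= CONE_DEG:
            return name, reach
    return None, reach

class ArmTrigger:
    """Dwell-then-fire, re-arm on return toward the torso. One instance per arm."""

    def __init__(self):
        self.armed = True
        self.candidate = None
        self.since = 0.0

    def update(self, region, reach, t):
        """Feed one frame (t in seconds); return a region name once per deliberate hit."""
        if not self.armed:
            if reach < NEUTRAL_MAX:       # arm came back: allow the next hit
                self.armed = True
                self.candidate = None
            return None
        if region != self.candidate:      # entered a new region, or left one
            self.candidate, self.since = region, t
            return None
        if region is not None and t - self.since >= DWELL_S:
            self.armed = False            # fire once, then wait for neutral
            self.candidate = None
            return region
        return None
```

Feed each arm's `ArmTrigger` with `classify(...)` once per frame. A non-None return is the moment to send that drum's byte. Firing on dwell rather than on entry keeps a wrist that merely passes through a region from triggering it. Re-arming on reach, rather than on a different region, is what lets the same gesture repeat.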

[Photo: on-screen overlay with skeleton and four region labels]

Pose tracking, the part that mattered

I used MoveNet SinglePose from TF Hub: Lightning for speed, Thunder when Lightning kept losing track of me. The camera frame is letterboxed to a square for the model, and keypoints are mapped back to the original frame size. That mapping mattered. Early on I drew keypoints in the wrong place because I ignored the padding.
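MoveNet returns each keypoint as `(y, x, score)`, normalized to the square model input, so undoing the letterbox only needs the original frame size. This is a minimal sketch that assumes the padding is split evenly between both sides, as `tf.image.resize_with_pad` does. The function names are hypothetical. Resizing the padded square to 192 or 256 pixels doesn't change the normalized coordinates, so the model size never enters the math.

```python
def letterbox_params(width, height):
    """Side of the padded square, plus the padding added on the left and on top."""
    side = max(width, height)
    return side, (side - width) / 2.0, (side - height) / 2.0

def keypoints_to_frame(keypoints, width, height):
    """Map MoveNet (y, x, score) rows, normalized to the padded square,
    back to (x, y, score) in pixels of the original width x height frame."""
    side, pad_x, pad_y = letterbox_params(width, height)
    out = []
    for y_n, x_n, score in keypoints:
        x = x_n * side - pad_x   # undo horizontal padding
        y = y_n * side - pad_y   # undo vertical padding
        out.append((x, y, score))
    return out
```

Take a 640×480 frame. The square is 640 pixels with 80 pixels of padding on top, so a keypoint at normalized `(0.5, 0.5)` lands at `(320, 240)`, the center of the original frame. Dropping the `pad_y` term puts every point 80 pixels too low, which is the kind of offset described above.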

To keep a steady 30 FPS, I drew to the window every few frames but ran inference every frame. Putting the FPS counter outside the draw throttle helped me see what was actually happening. Constant light helped more than any parameter change.
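Here is a minimal sketch of that loop. It uses these hypothetical names:

- `infer` and `draw` are callbacks standing in for the MoveNet call and the window update.
- `draw_every=3` is an assumed value for "every few frames".
- The injectable `clock` exists only to make the example testable.

The counter ticks on every processed frame, so the reported FPS reflects inference speed rather than the redraw rate.

```python
import time
from collections import deque

class FpsCounter:
    """Rolling frames-per-second over the last `window` ticks."""

    def __init__(self, window=30):
        self.stamps = deque(maxlen=window)

    def tick(self, t):
        self.stamps.append(t)

    def fps(self):
        if len(self.stamps) < 2:
            return 0.0
        span = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / span if span > 0 else 0.0

def run(frames, infer, draw, draw_every=3, clock=time.perf_counter):
    """Run `infer` on every frame but call `draw` only on every `draw_every`-th frame."""
    counter = FpsCounter()
    for i, frame in enumerate(frames):
        result = infer(frame)       # inference runs every frame
        counter.tick(clock())       # counted outside the draw throttle
        if i % draw_every == 0:
            draw(frame, result, counter.fps())
    return counter
```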

Serial and timing problems I hit

The Arduino listens for one-byte commands over USB serial. At first I had manual hits and the clocked pattern sending from different threads. Bytes interleaved and some hits vanished. I wrapped serial writes in a single locked function and added a green “beat flash” box on screen. If the box blinked and the drum didn’t hit, I knew it wasn’t software.
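Here is a minimal sketch of a single locked write path. It assumes the manual-play code and the pattern clock both call the same object. `DrumPort`, `hit`, and `on_sent` are hypothetical names. The command bytes match the Arduino sketch at the end of the post. The port can be any object with a `write(bytes)` method, such as a pyserial `serial.Serial` opened at 115200 baud.

```python
import threading

class DrumPort:
    """Single write path for drum commands, shared by all threads.

    `port` is anything with write(bytes). `on_sent` is an optional
    (hypothetical) callback used to drive the on-screen beat flash.
    """

    COMMANDS = frozenset({b"K", b"S", b"H", b"C"})

    def __init__(self, port, on_sent=None):
        self._port = port
        self._on_sent = on_sent
        self._lock = threading.Lock()

    def hit(self, cmd):
        if cmd not in self.COMMANDS:
            raise ValueError(f"unknown drum command: {cmd!r}")
        with self._lock:            # one writer at a time
            self._port.write(cmd)
            if self._on_sent is not None:
                self._on_sent(cmd)  # flash only for bytes actually handed to the port
```

The flash callback runs inside the lock, after the write. As a result, the green box only blinks for bytes that really went to the port, which is what makes it a clean dividing line between software and hardware faults.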

Autobeat, kept as an option

There is a simple pattern feature: kick, kick, snare, rest. It turns on when both arms are held up for a moment. While it's on, one arm stays up as an anchor and the other sets the tempo by wrist height. BPM changes only on beat edges, and only when the new value moves past a small deadband. No stutter. It is secondary to manual play, but useful in a demo.
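Here is a minimal sketch of the pattern clock with edge-only tempo updates. It assumes wrist height has already been normalized from 0 (low) to 1 (high), and that `poll` is called once per frame with a time in seconds. The tempo range, the deadband, and the names are hypothetical. Detecting the both-arms-up gesture that switches autobeat on is left out.

```python
PATTERN = [b"K", b"K", b"S", None]   # kick, kick, snare, rest
BPM_MIN, BPM_MAX = 60.0, 180.0       # hypothetical tempo range
DEADBAND_BPM = 4.0                   # hypothetical: smaller changes are ignored

def height_to_bpm(h):
    """Map normalized wrist height (0 = low, 1 = high) linearly onto the tempo range."""
    h = max(0.0, min(1.0, h))
    return BPM_MIN + h * (BPM_MAX - BPM_MIN)

class AutoBeat:
    def __init__(self, bpm=120.0):
        self.bpm = bpm
        self.target = bpm
        self.step = 0
        self.next_beat = None

    def set_height(self, h):
        """Record the requested tempo; it is applied at the next beat edge."""
        self.target = height_to_bpm(h)

    def poll(self, t):
        """Call every frame. Returns the command byte on a beat edge, else None.
        Rests also return None."""
        if self.next_beat is None:
            self.next_beat = t
        if t < self.next_beat:
            return None
        # Beat edge: the only place the tempo may change, and only past the deadband.
        if abs(self.target - self.bpm) >= DEADBAND_BPM:
            self.bpm = self.target
        cmd = PATTERN[self.step]
        self.step = (self.step + 1) % len(PATTERN)
        interval = 60.0 / self.bpm
        self.next_beat += interval
        if self.next_beat <= t:      # fell behind after a slow frame: resync, don't burst
            self.next_beat = t + interval
        return cmd
```

The interval is recomputed only at an edge, so a wrist that drifts slightly never bends the beat mid-bar. A move larger than the deadband shows up on the very next beat.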

Mechanics

Each voice is a drumstick on a hinge. I used two L-brackets and a through-bolt. A 12 V solenoid pulls a short wire tied a few inches from the pivot. Small travel, fast tip. Each instrument wanted a slightly different tie point and stop, so I adjusted by eye. Strike pulses are about 40 ms. That is enough to be audible and keeps the coils cool.
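Here is "keeps the coils cool" in numbers. The Arduino sketch at the end of the post uses `PULSE_MS = 40` and a per-voice `COOLDOWN_MS = 80`, measured from the start of one hit to the start of the next. This minimal sketch computes the resulting duty cycle. The helper names are hypothetical.

```python
PULSE_MS = 40      # strike pulse, as in the Arduino sketch
COOLDOWN_MS = 80   # minimum start-to-start spacing per voice, as in the sketch

def beat_interval_ms(bpm, hits_per_beat=1):
    """Time between hits when playing `hits_per_beat` evenly spaced hits per beat."""
    return 60000.0 / (bpm * hits_per_beat)

def duty_cycle(interval_ms, pulse_ms=PULSE_MS):
    """Fraction of time one coil is energized at a steady hit interval."""
    interval_ms = max(interval_ms, COOLDOWN_MS)  # the firmware drops faster requests
    return pulse_ms / interval_ms

for label, interval in [("quarter notes at 120 BPM", beat_interval_ms(120)),
                        ("sixteenths at 120 BPM", beat_interval_ms(120, 4)),
                        ("as fast as the firmware allows", 0.0)]:
    print(f"{label}: {duty_cycle(interval):.0%}")
```

This prints 8%, 32%, and 50%. Even a steady sixteenth-note roll leaves a coil off about two thirds of the time. Whether 50% at the firmware's maximum rate is safe depends on the solenoid's rated duty cycle, which isn't given here.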

Everything is mounted to a 24 inch round wood base. Tallest drum is about 13 inches. The assembly is about 20 pounds. The camera sits at the center of the board, facing the viewer.

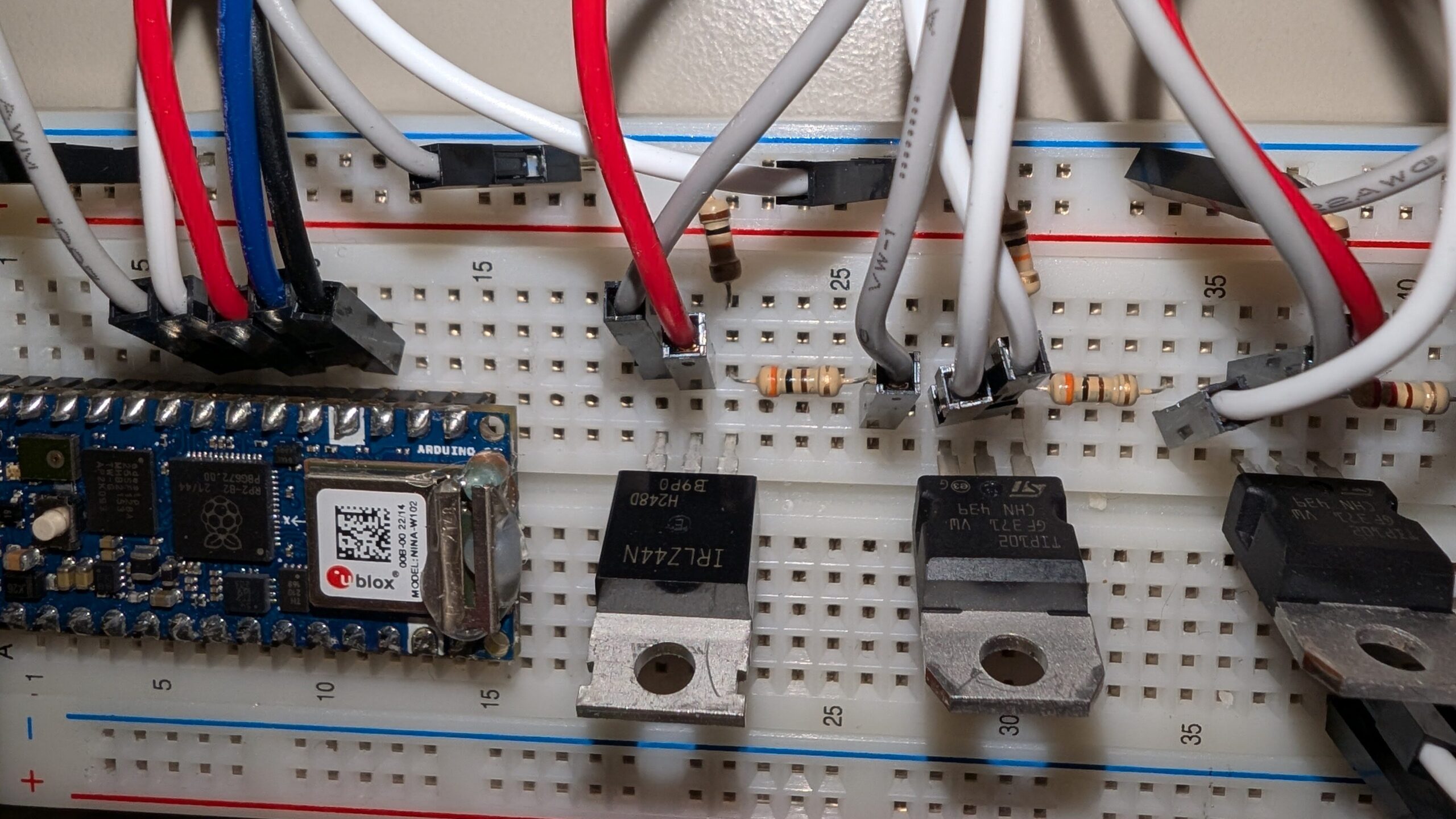

Electronics

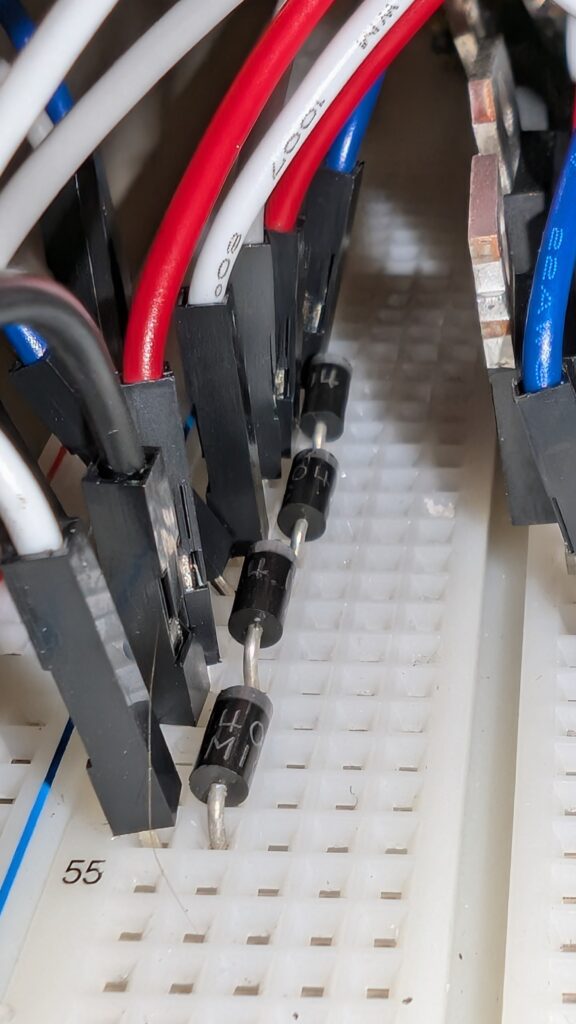

Arduino on pins 2–5. Logic-level N-MOSFET per solenoid. 100 Ω gate resistor. 100 kΩ gate pull-down. Flyback diode across each coil, anode at the drain and cathode at +12 V. One 12 V supply sized for two simultaneous hits. I used RCA connectors so I could swap instruments quickly. Not ideal for power, but fine for short pulses.
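Sizing the supply is Ohm's law on the coil. The post doesn't give the coil resistance, so the 12 Ω below is a hypothetical placeholder; measure your own solenoid. The 25% headroom is also an assumption. Inductance slows the current rise during a pulse, so V/R is an upper bound and the safe number to size against. The average-current helper shows why short pulses are easy on the RCA connectors.

```python
SUPPLY_V = 12.0
COIL_OHMS = 12.0     # hypothetical coil resistance; measure yours

def coil_current_a(supply_v=SUPPLY_V, coil_ohms=COIL_OHMS):
    """Worst-case (steady-state) current through one energized coil: I = V / R."""
    return supply_v / coil_ohms

def supply_rating_a(simultaneous=2, headroom=1.25, **coil):
    """Minimum supply rating for `simultaneous` coils on at once, plus headroom."""
    return simultaneous * coil_current_a(**coil) * headroom

def average_current_a(pulse_ms, interval_ms, **coil):
    """Average current through one channel (and its connector) at a steady hit rate."""
    return coil_current_a(**coil) * pulse_ms / interval_ms
```

With these placeholder values, one coil draws at most 1 A and two simultaneous hits call for a 2.5 A supply. A 40 ms pulse every 500 ms averages only 0.08 A through a channel's connector.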

Installation

Viewer stands in front. About six feet of space is comfortable. The laptop runs out of sight within six feet of the base so the USB cable reaches the Arduino. One nearby AC outlet is enough. Two is nicer if the laptop isn’t on battery.

What changed after people touched it

- People wanted quick repeats. I tuned the dwell and neutral thresholds until repeats felt natural without chatter.

- False triggers during tempo adjustment were annoying. I mute manual hits when an arm is “up” for tempo.

- Loose motion was more fun than precise motion. I set the angle and extension thresholds so it accepts approximate moves without crosstalk.

What I would change next

- Replace RCA with a connector made for power, or add per-channel polyfuses.

- Add a simple on-screen threshold wizard to fit different bodies and camera heights.

- Try a second camera to reduce occlusion.

- Consider a fifth gesture for choke or mute.

Arduino sketch

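```cpp
// FourSolenoids.ino
// Pins: 2=K, 3=S, 4=H, 5=C
// Each one-byte serial command ('K', 'S', 'H', 'C') fires one solenoid for
// PULSE_MS. Any other byte (newlines, stray characters) is ignored.

const uint8_t PIN_K = 2;
const uint8_t PIN_S = 3;
const uint8_t PIN_H = 4;
const uint8_t PIN_C = 5;

const uint16_t PULSE_MS = 40;     // solenoid on time
const uint16_t COOLDOWN_MS = 80;  // minimum time between the starts of two hits on one voice

struct Voice {
  uint8_t pin;
  bool active;           // coil currently energized
  unsigned long t_on;    // millis() when the current pulse started
  unsigned long t_last;  // millis() of the last accepted hit
} vK{PIN_K, false, 0, 0}, vS{PIN_S, false, 0, 0}, vH{PIN_H, false, 0, 0}, vC{PIN_C, false, 0, 0};

// Start a pulse unless this voice is already on or still cooling down.
// Early requests are dropped, not queued. Unsigned subtraction keeps the
// comparison correct when millis() rolls over.
void trigger(Voice &v) {
  unsigned long now = millis();
  if (!v.active && (now - v.t_last) >= COOLDOWN_MS) {
    digitalWrite(v.pin, HIGH);
    v.active = true;
    v.t_on = now;
    v.t_last = now;
  }
}

void setup() {
  pinMode(PIN_K, OUTPUT);
  pinMode(PIN_S, OUTPUT);
  pinMode(PIN_H, OUTPUT);
  pinMode(PIN_C, OUTPUT);
  // Drive every gate low so no coil fires at startup.
  digitalWrite(PIN_K, LOW);
  digitalWrite(PIN_S, LOW);
  digitalWrite(PIN_H, LOW);
  digitalWrite(PIN_C, LOW);
  Serial.begin(115200);
}

void loop() {
  // Handle every byte that has arrived since the last pass.
  while (Serial.available() > 0) {
    char c = (char)Serial.read();
    if (c == 'K') trigger(vK);
    else if (c == 'S') trigger(vS);
    else if (c == 'H') trigger(vH);
    else if (c == 'C') trigger(vC);
  }

  // End any pulse that has run for PULSE_MS. This never blocks, so the
  // four voices time out independently and serial input stays responsive.
  unsigned long now = millis();
  auto service = [&](Voice &v) {
    if (v.active && (now - v.t_on) >= PULSE_MS) {
      digitalWrite(v.pin, LOW);
      v.active = false;
    }
  };
  service(vK); service(vS); service(vH); service(vC);
}
```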
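A host-side model of one `Voice` is a quick way to reason about the sketch's two timers without hardware. This is a model, not the firmware. It steps a simulated clock one millisecond at a time, where the real loop runs much faster. It keeps the same order as `loop()`: incoming bytes are handled first, then pulses are serviced. The class and function names are hypothetical.

```python
PULSE_MS = 40
COOLDOWN_MS = 80

class VoiceModel:
    """Python mirror of the sketch's Voice, trigger(), and service logic."""

    def __init__(self):
        self.active = False
        self.t_on = 0
        self.t_last = 0   # starts at 0, like the sketch's initializers

    def trigger(self, now):
        if not self.active and now - self.t_last >= COOLDOWN_MS:
            self.active, self.t_on, self.t_last = True, now, now
            return True
        return False      # request dropped, not queued

    def service(self, now):
        if self.active and now - self.t_on >= PULSE_MS:
            self.active = False

def simulate(arrivals_ms, end_ms):
    """Return (accepted hit times, total ms the coil was on) for byte arrival times."""
    voice, accepted, on_ms = VoiceModel(), [], 0
    for now in range(end_ms):
        for t in arrivals_ms:
            if t == now and voice.trigger(now):
                accepted.append(now)
        voice.service(now)
        on_ms += voice.active
    return accepted, on_ms

print(simulate([100, 130, 185, 200], 400))
```

This prints `([100, 185], 80)`. The requests at 130 ms and 200 ms are dropped because the voice was still energized. The model also exposes a small quirk of the sketch: `t_last` starts at 0, so a hit requested in the first 80 ms after boot is ignored.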

Links and media

- Code: GitHub

If you want any details not covered here, check the code comments in the repo. The behavior you feel comes from a few thresholds and two short timers, not magic.